PLATFORMS

SOLUTIONS

BLOGS

CONTACT

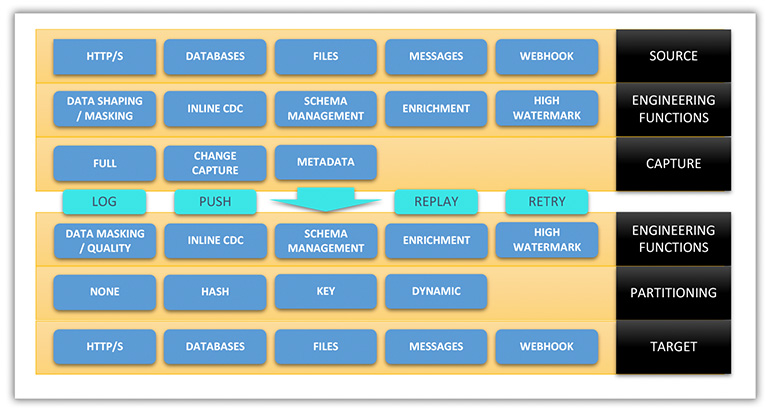

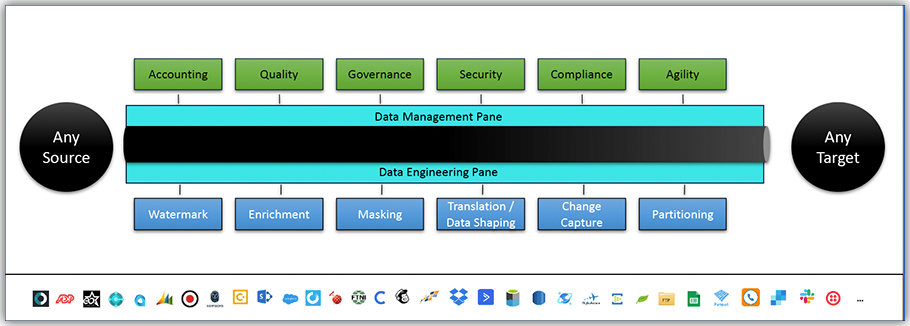

Think of it like a data pipeline within a data pipeline. DataZen gives you the flexibility to enrich your data as it moves from source to destination.

Track and quickly identify all changes to your data. In addition to validation and transparency, this also reduces integration work by weeks for every new integration you deploy.
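As a rough illustration of what identifying changes between two pipeline runs can look like, here is a minimal sketch that hashes each row and compares two snapshots. It is not DataZen's implementation; the `row_hash` and `detect_changes` helpers, the `id` key, and the sample records are all hypothetical.

```python
import hashlib
import json


def row_hash(row):
    """Return a stable content hash for a row (keys sorted so field order is irrelevant)."""
    payload = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def detect_changes(previous, current, key="id"):
    """Compare two snapshots (lists of dicts) and classify rows by their key.

    Hypothetical helper: assumes every row carries a unique `key` field.
    """
    prev_hashes = {row[key]: row_hash(row) for row in previous}
    curr_hashes = {row[key]: row_hash(row) for row in current}

    inserted = sorted(k for k in curr_hashes if k not in prev_hashes)
    deleted = sorted(k for k in prev_hashes if k not in curr_hashes)
    updated = sorted(
        k for k in curr_hashes
        if k in prev_hashes and curr_hashes[k] != prev_hashes[k]
    )
    return {"inserted": inserted, "updated": updated, "deleted": deleted}


# Sample data, invented for illustration only.
previous = [
    {"id": 1, "name": "Ada"},
    {"id": 2, "name": "Bob"},
    {"id": 3, "name": "Cy"},
]
current = [
    {"id": 1, "name": "Ada"},
    {"id": 2, "name": "Robert"},
    {"id": 4, "name": "Dee"},
]

changes = detect_changes(previous, current)
print(changes)
```

Only the rows listed under `inserted`, `updated`, or `deleted` would need to move downstream; unchanged rows can be skipped.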

Reduce the time, processing and network resources needed by tapping a more efficient data management process that ingests only the data you need.

Perform advanced translation, transformation, and schema management operations with pre-built data engineering functions and the power of SQL to shape your data.
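To make the idea of shaping data with SQL concrete, the sketch below uses Python's built-in `sqlite3` module as a stand-in engine. It does not use DataZen's functions or syntax; the `raw_orders` table, its columns, and the `shape_orders` helper are assumptions made for illustration.

```python
import sqlite3


def shape_orders(rows):
    """Load raw rows into an in-memory table, then clean and aggregate them with SQL.

    Hypothetical example: normalizes customer names and converts cents to a decimal total.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE raw_orders (id INTEGER, customer TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)

    shaped = conn.execute(
        """
        SELECT UPPER(TRIM(customer)) AS customer,
               SUM(amount_cents) / 100.0 AS total_amount
        FROM raw_orders
        GROUP BY UPPER(TRIM(customer))
        ORDER BY customer
        """
    ).fetchall()
    conn.close()
    return shaped


# Sample rows, invented for illustration only.
rows = [(1, " acme ", 1250), (2, "Acme", 750), (3, "globex", 500)]
result = shape_orders(rows)
print(result)
```

The query trims and upper-cases the customer name so that inconsistent source values collapse into one record before aggregation.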

Enable real-time data intelligence with data pipeline webhooks that ingest your data instantly and trigger downstream processes, including refreshes of your AI and ML search engines.

Manage the environment yourself in VMs or bare metal

Bring the flexibility of containers to your organization

Create your data pipelines in our secure cloud environment

Why DataZen Is the Go-To Data Pipeline

All information regarding your data sources and destinations is available quickly and easily. See historical pipeline executions and hold your SaaS vendors accountable for performance and data quality.

Save considerable time and budget with a standardized integration approach, while accelerating your speed to market and productivity.

Managers can see historical events and monitor the entire data pipeline ecosystem, while developers and architects can manage connections and build their data pipeline processes.

Eliminate the guesswork. Staff members can focus on productive work instead of repetitive processes and remedial fixes. Empower your data engineering team with highly flexible, pre-built data engineering functions.

Access the Online Portal to request an online account and manage your cloud pipelines.

Online Portal

Architecture overview, job configuration settings, options, and monitoring.

Online Documentation

View the online documentation for our REST API to manage your data pipelines.

View Open API

© 2025 - Enzo Unified